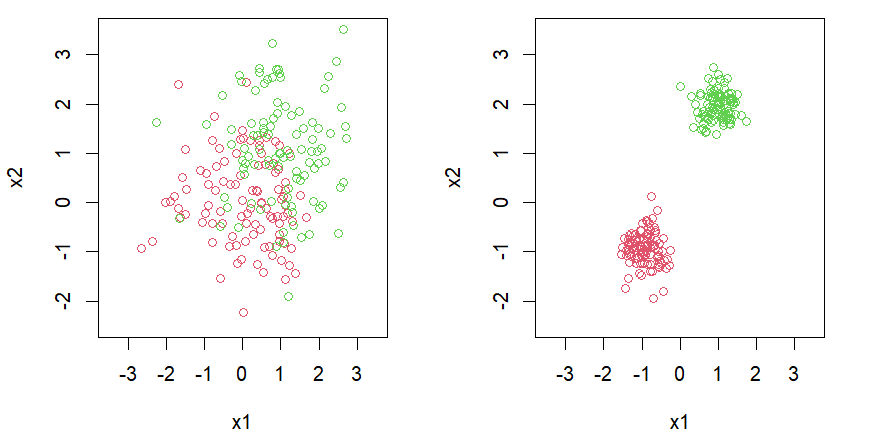

Linear Separable

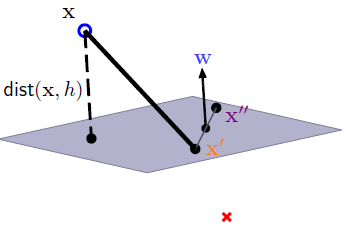

Distance

SVM

Hyperplane and data point with label

Let , then \quad

Denote as , as

If optimal with , we can scale to

Let , and we claim that

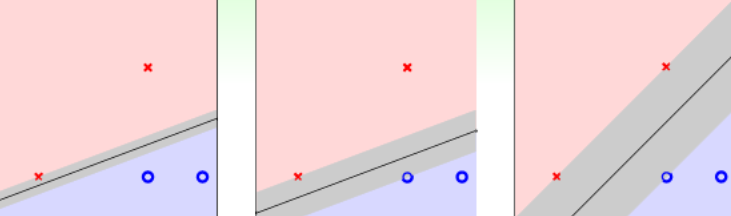

Violating : \\\

\qquad \qquad

Feasible : \\\

\qquad \qquad

Slater’s Condition

What’s more, the are the same

KKT Conditions

For convex optimization problem \\\ \qquad

Denote as , as . Assume the functions are differentiable. The KKT conditions are \begin{enumerate} \item \item \item \item \end{enumerate}

\qquad \quad

$$\begin{align*} & \underset{\forall \lambda_{i}{\text{arg max}} \geq 0} \left(\min _{b, \underset{\sim}{w}} L\left(b, \underset{\sim}{w}, \lambda_{i}\right)\right) \\\\\\ = & \underset{\forall \lambda_{i} \geq 0}{\text{arg max}} \left(\min _{b, \underset{\sim}{w}}\left(\frac{1}{2} \underset{\sim}{w}^{T} \underset{\sim}{w}+\sum_{i=1}^{N} \lambda_{i}\left(1-y_{i}\left(\underset{\sim}{w}^{T} \underset{\sim}{x_i}+b\right)\right)\right)\right) \\\\\\ = & \underset{\forall \lambda_{i} \geq 0}{\text{arg max} } \left(\min _{b, \underset{\sim}{w}} \left(\frac{1}{2} \underset{\sim}{w}^{T} \underset{\sim}{w}+\sum_{i=1}^{N} \lambda_{i}-\underset{\sim}{w}^{T} \underset{\sim}{w}+0\right)\right) \\\\\\ = & \underset{\forall \lambda_{i} \geq 0}{\text{arg max}} \left(-\frac{1}{2}\left|\sum_{i=1}^{N} \lambda_{i} y_{i} \underset{\sim}{x_i} \right|^{2}+\sum_{i=1}^{N} \lambda_{i}\right) \end{align*}$$Quadratic Programming

Rewrite as \ ,

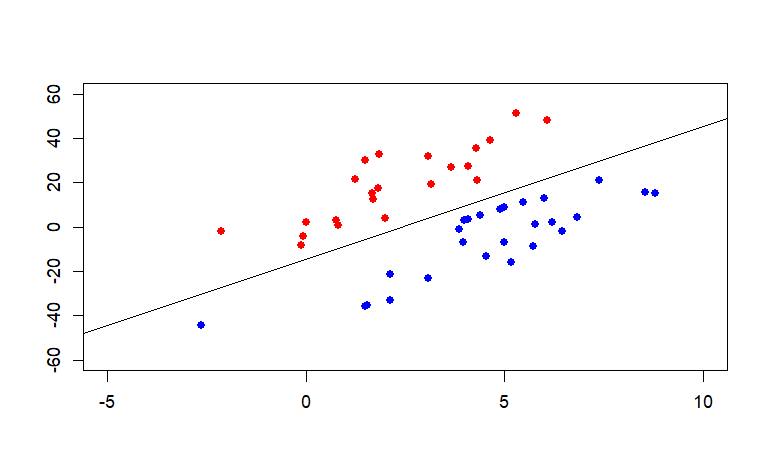

Example

Check the outcome of svm and QP are equal.

4 data points

| |

48 data points

| |

Reference

Convex Optimization

Method of Lagrange Multipliers

線代啟示錄

Karush-Kuhn-Tucker conditions pdf

Karush-Kuhn-Tucker conditions

林軒田-機器學習技法

An Idiot’s guide to Support vector machines

Support Vector Machine(with Numerical Example)

A Tutorial on Support Vector Machines for Pattern Recognition

Machine Learning Basics

RPubs SVM